04 Jan Web Scraping: Overview and Effects of Website Scrapers

Also known as web data extraction, web scraping is an automated method for extracting content from a website.

In this context, the content may be text, images, descriptions, prices, reviews, or any other information that a competitor or malicious actor could use to harm your business.

The Open Web Application Security Project (OWASP) lists this practice as OAT-011. OWASP defines it as collecting application content and other data for use elsewhere.

Today, web scraping is carried out by automated bots. Their primary advantage is speed: a bot can work through many web pages quickly and deliver the results to its owner.

Growth of web scraping

Web scraping has grown into a vast industry. Though it has legitimate uses, it also causes many problems that harm businesses.

The industries affected include education, finance, e-commerce, entertainment, media and publishing, and social networking, to name a few.

Scraping has even moved to the cloud, where multiple companies now offer it as a service. With such services, scraping no longer requires programming knowledge.

Website scraping: malicious or legit?

Businesses experience a blend of legitimate and malicious scraping. Malicious scraping, including abuse of a site's search function, tends to show the characteristics below.

- Search queries target a single web application URI with a precision and speed no human could match, and they come from multiple locations.

- The scraper uses evasive and masking techniques to disguise itself as a genuine user, such as browser spoofing, sophisticated user agent rotation, and forgery.

- Many queries target URIs and inventory items that do not exist, which strains your network infrastructure.

- The queries originate from a wide range of locations that do not match the locations named in the searches.

The above signs collectively provide firm evidence for the malicious intent of scraping.
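As a rough illustration, some of the signs above could be checked against request records with a few simple thresholds. The sketch below is a minimal example under stated assumptions, not a production detector: the `Request` record, the `scraping_signals` function, the signal names, and every threshold value are hypothetical, and it groups traffic per client, so it does not cover scraping that is distributed across many locations.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Request:
    # Hypothetical record; real logs will carry different fields.
    client_id: str    # assumed identifier, e.g. an IP address or session key
    timestamp: float  # seconds since the epoch
    path: str
    status: int       # HTTP response status code
    user_agent: str


def scraping_signals(requests, max_rate=5.0, max_not_found_ratio=0.3,
                     max_user_agents=3):
    """Return {client_id: [signal names]} for clients that trip a threshold.

    All thresholds are illustrative assumptions, not recommended values.
    """
    by_client = defaultdict(list)
    for req in requests:
        by_client[req.client_id].append(req)

    flagged = {}
    for client, reqs in by_client.items():
        signals = []

        # Sign: requests arrive faster than a human could issue them.
        times = [r.timestamp for r in reqs]
        span = max(times) - min(times)
        if len(reqs) > 1 and len(reqs) / max(span, 1.0) > max_rate:
            signals.append("too_fast")

        # Sign: many requests for URIs or items that do not exist.
        not_found = sum(1 for r in reqs if r.status == 404)
        if not_found / len(reqs) > max_not_found_ratio:
            signals.append("missing_uris")

        # Sign: the client keeps changing its User-Agent header.
        if len({r.user_agent for r in reqs}) > max_user_agents:
            signals.append("user_agent_rotation")

        if signals:
            flagged[client] = signals
    return flagged
```

No single signal is conclusive on its own; as noted above, it is the combination that points to malicious intent.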

Why is it hard to prevent web scraping?

Today, many connected organisations face the threat of web scraping. They also face the challenge of addressing it in a scalable and efficient way.

Web scraping has a broad impact ranging from increased spending on infrastructure to loss of proprietary business information and intellectual property.

Of all the automated threats and attacks, web scraping is the most difficult to prevent. The reasons are set out below.

Web scraping is primarily HTTP GET-based

Web scraping is carried out by sending many HTTP GET requests to the server or URI under attack. On a typical domain, most transactions are HTTP GET requests anyway.

This means that the bot mitigation solution you employ must process every HTTP GET transaction and be able to handle that volume.

This creates problems for both efficacy and scalability.

- Efficacy: Since many bot mitigation solutions rely on HTTP POST to send device fingerprinting logic, they miss most of the attack signals carried in HTTP GET requests.

- Scale: Most bot mitigation solutions have an appliance component designed around POST transaction volumes, so they do not scale well. They must be significantly oversized to handle the traffic of medium to large websites.
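To see how much of your own traffic a POST-oriented solution would never inspect, you can measure the share of each HTTP method in an access log. The sketch below assumes log lines that contain a quoted request line such as `"GET /path HTTP/1.1"`, as in the common and combined log formats; the `method_share` function and the `REQUEST_RE` pattern are hypothetical names chosen for illustration.

```python
import re
from collections import Counter

# Matches the quoted request line, e.g. "GET /products/1 HTTP/1.1".
# Assumes a common/combined-style access log; adjust for other formats.
REQUEST_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*"')


def method_share(log_lines):
    """Return {method: fraction of parsed requests} for the given lines."""
    counts = Counter()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if match:  # silently skip lines that do not look like requests
            counts[match.group("method")] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {method: n / total for method, n in counts.items()}
```

If the result shows that the large majority of requests are GETs, that is the volume a mitigation solution must be sized for.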

It can happen anywhere within a website

Unlike other automated attacks, which target a specific endpoint or application, web scraping can be directed at any endpoint or application within the website.

For instance, credential stuffing and account takeovers target credential-based applications, and denial of inventory targets checkout applications, whereas web scraping has a much broader reach.

The breadth of the threat is what makes preventing web scraping such a challenge.

Is your mitigation solution able to handle all of your public-facing applications, including the endpoints that generate URIs dynamically?

A tool that requires application instrumentation forces you to inject an agent into every endpoint and web application in your domain. This affects you in the following ways:

- Injecting an agent into the webpage adds complexity and delay to the application development and deployment workflow.

- The agent and its processing burden may slow webpage load times, and it may be harder to add an agent at all where URIs are generated dynamically.

These attacks leverage endpoints and APIs

Using API endpoints has become an essential element in moving towards more rapid and iterative application development.

API endpoints expose the same information as the web-based interfaces, and they serve partners, mobile users, and aggregators.

When a scraper runs into anti-scraping measures on the web application, it switches to the API endpoints.

The major challenge for bot mitigation solutions at API endpoints is that there is no page or SDK in which to install the agent.

The effects of web scraping

Loss of revenue

Web scraping can erode your competitive advantage when the scraper copies your proprietary data and business plans. This can shrink the business's customer base.

For those who earn through advertising on their web pages, the drop in web traffic reduces earnings, because users may be rerouted to the site where your content has been reposted.

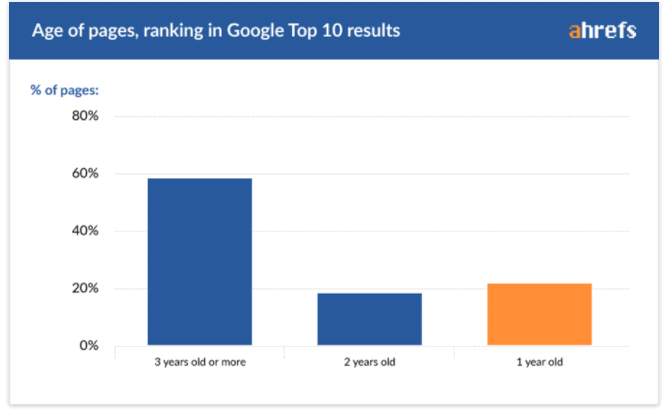

Drop in SEO ranking

Content posted on your website forms part of your intellectual property.

When someone scrapes or misuses it, they can undermine your SEO efforts to improve visibility in search engines.

Since search engines prioritise originality, duplicated content can downgrade your search engine visibility, and the scraper may end up ranking higher on the SERP than your business.

Skewed analytics

A business requires accurate analytics to make the right decisions. The web and marketing teams rely heavily on metrics such as bounce rates, page views, demographics, and much more.

A scraper bot distorts your analytical data. As a result, you cannot reliably forecast future trends, which is a stumbling block to proper decision-making.
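A small worked example shows how quickly bot sessions can distort a single metric. The sketch below uses made-up session data and a simplified definition of bounce rate (the share of sessions with exactly one page view); the `bounce_rate` function and both sample lists are hypothetical, and real analytics tools may define the metric differently.

```python
def bounce_rate(sessions):
    """Share of sessions with exactly one page view (simplified definition).

    `sessions` is a list of page-view counts, one entry per session.
    """
    if not sessions:
        return 0.0
    bounces = sum(1 for pages in sessions if pages == 1)
    return bounces / len(sessions)


# Hypothetical data: eight human sessions with varied browsing depth.
human_sessions = [1, 3, 5, 2, 1, 4, 2, 6]

# Hypothetical data: a scraper that opens a fresh session for every page
# it fetches, so each of its twelve sessions counts as a bounce.
bot_sessions = [1] * 12

print(bounce_rate(human_sessions))                 # 2 of 8 sessions bounce
print(bounce_rate(human_sessions + bot_sessions))  # 14 of 20 sessions bounce
```

In this made-up case the real bounce rate is 25%, but the figure reported with the bot traffic included is 70%, which could lead a marketing team to the wrong conclusion about its content.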

Conclusion

Web scraping has been common practice for some time, and it can be used with good or malicious intent. Regardless of the intent, a scraper should get permission before copying content.

Preventing this form of attack is difficult because of the above factors. To avoid the above impacts and many more, there is a need to enlist a dedicated bot management solution.

The post Web Scraping: Overview and Effects of Website Scrapers is by Stuart and appeared first on Inkbot Design.